MMD: Towards Building Large Scale Multimodal Domain-Aware Conversation Systems

Abstract

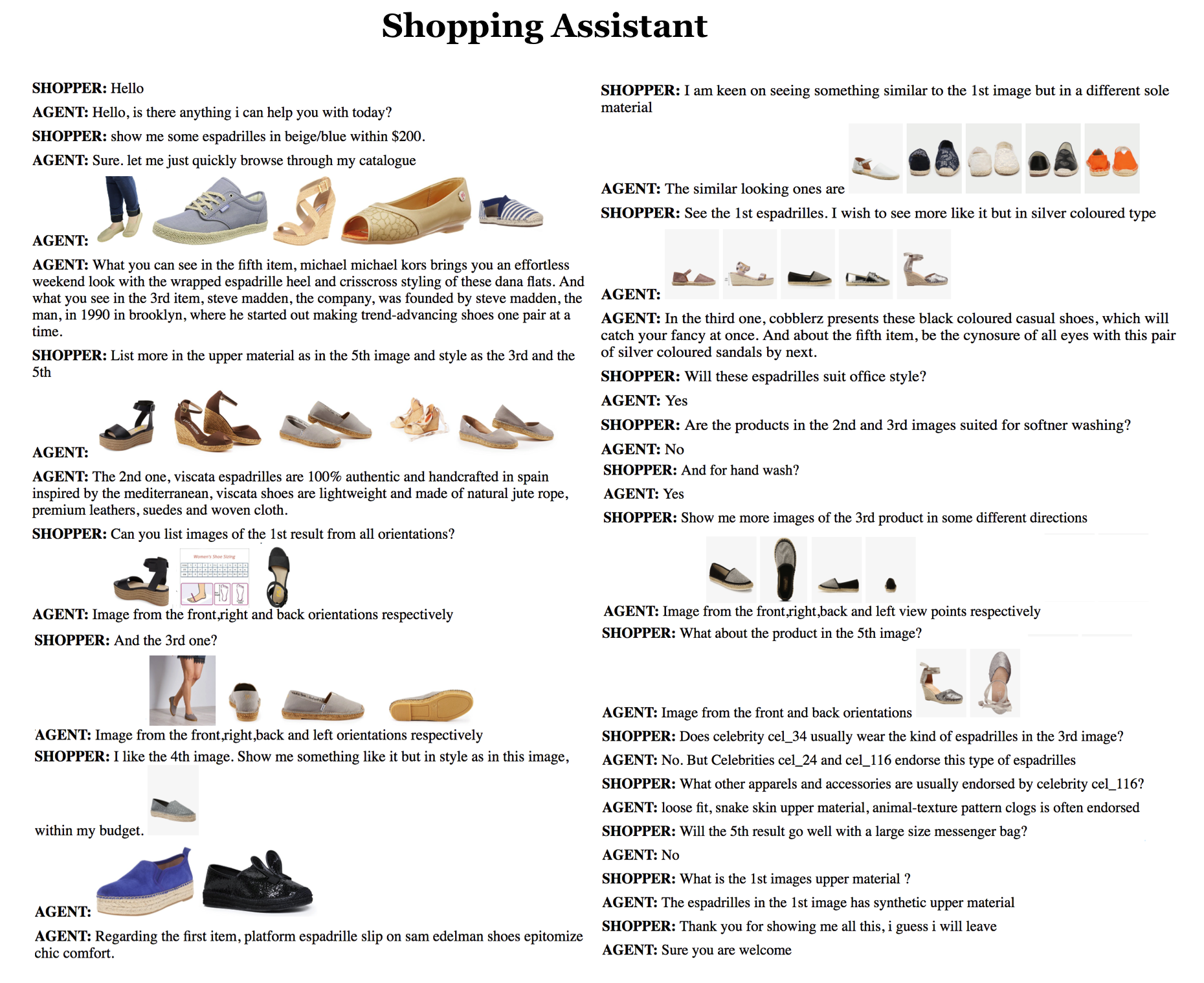

While multimodal conversation agents are gaining importance in several domains such as retail, travel etc., deep learning research in this area has been limited primarily due to the lack of availability of large-scale, open chatlogs. To overcome this bottleneck, in this paper we introduce the task of multimodal, domain-aware conversations, and propose the MMD benchmark dataset. This dataset was gathered by working in close coordination with large number of domain experts in the retail domain. These experts suggested various conversations flows and dialog states which are typically seen in multimodal conversations in the fashion domain. Keeping these flows and states in mind, we created a dataset consisting of over 150K conversation sessions between shoppers and sales agents, with the help of in-house annotators using a semi-automated manually intense iterative process.

With this dataset, we propose 5 new sub-tasks for multimodal conversations along with their evaluation methodology. We also propose two multimodal neural models in the encode-attend-decode paradigm and demonstrate their performance on two of the sub-tasks, namely text response generation and best image response selection. These experiments serve to establish baseline performance and open new research directions for each of these sub-tasks. Further, for each of the sub-tasks, we present a 'per-state evaluation' of 9 most significant dialog states, which would enable more focused research into understanding the challenges and complexities involved in each of these states.

CODE

Github Repository for the code repo link

PAPER

Please download the paper here paper link

AAAI 2018 SLIDES

Please download the slides here slides link

BIBTEX

@article{1704.00200,

Author = {Amrita Saha and Mitesh M. Khapra and Karthik Sankaranarayanan},

Title = {Towards Building Large Scale Multimodal Domain-Aware Conversation Systems},

Year = {2017},

Eprint = {arXiv:1704.00200},

}

DATASET

Please Click here to download the dataset.

EXAMPLE

Multi-Fold Challenges of this Dataset

| Type of Complexity | Example Dialog State | Example Utterance |

|---|---|---|

| Long-Term Dialog Context | At the beginning of the dialog the user mentions his budget or size preference and after a few utterances, asks the agent to show something under his budget or size | I like the 4th image. Show me something like it but in style as in this image within my budget. |

| Quantitative Inferencing (Counting) | User points to the nth item displayed and asks a question about it | Show me more images of the 3rd product in some different directions |

| Quantitative Inferencing (Sorting/Filtering) | User wants sorting/filtering of a list based on a numerical field, e.g. price or product rating | Show me something like it but in style as in this image within my budget. |

| Logical Inference | User likes one fashion attribute of the nth image displayed but does not like another attribute of the same | I am keen on seeing something similar to the 1st image but in a different sole material |

| Visual Inference | System adds a visual description of the product alongside the images | Viscata shoes are lightweight and made of natural jute, premium leather, suedes and woven cloth |

| Collective inference over multiple Images | User?s question can have multiple aspects, drawn from multiple images displayed in the current or past context | List more in the upper material of the 5th image and style as the 3rd and the 5th |

| Multimodal Inference | User gives partial information in form of images and text in the context | See the first espadrille. I wish to see more like it but in a silver colored type |

| Inference using domain knowledge and context | Sometimes inferences for the user?s questions go beyond the dialog context to understanding the domain | Will the 5th result go well with a large sized messenger bag? |

| Coreference or Ellipses Resolution | Temporal continuity between successive questions from the user may cause some of them to be incomplete or to refer to items or aspects mentioned previously | Show me the 3rd product in some different directions ... What about the product in the 5th image? |